An intuitive tool that lets the user create music by touching objects around them, using a hand tracking library and image processing algorithms.

The project is an easy, intuitive, and fun way to create music using everyday objects, the user’s hands, and a computer with access to a camera and speakers.

The program lets the user register objects by touching them, and assigns a musical note to each one in a predefined order. Afterwards, the user can touch the objects again to play sounds from an instrument chosen beforehand: piano (two options), guitar, or drums.

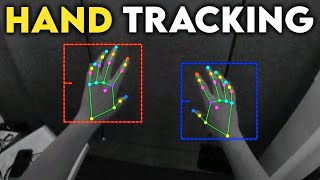

This is achieved by combining MediaPipe (a Google library of pre-trained machine learning models), which can identify hands in video in real time, with image processing algorithms that can identify objects with approximately uniform coloration.
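To illustrate the idea of finding an object with approximately uniform coloration, here is a minimal sketch using region growing from a seed pixel (for example, the fingertip position reported by the hand tracker). This is an assumption for illustration only: the function name `grow_uniform_region`, the `tolerance` parameter, and the choice of region growing are hypothetical and are not necessarily what the project uses.

```python
from collections import deque


def grow_uniform_region(image, seed, tolerance=30):
    """Return the set of (row, col) pixels connected to `seed` whose color
    differs from the seed color by at most `tolerance` in every channel.

    `image` is a list of rows, each row a list of (R, G, B) tuples.
    Hypothetical helper, not taken from the project's code.
    """
    rows, cols = len(image), len(image[0])
    seed_color = image[seed[0]][seed[1]]
    region = {seed}
    queue = deque([seed])
    while queue:
        r, c = queue.popleft()
        # Visit the four direct neighbours of the current pixel.
        for nr, nc in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1)):
            if not (0 <= nr < rows and 0 <= nc < cols):
                continue
            if (nr, nc) in region:
                continue
            color = image[nr][nc]
            if all(abs(a - b) <= tolerance for a, b in zip(color, seed_color)):
                region.add((nr, nc))
                queue.append((nr, nc))
    return region


# A 4x4 test image: a reddish 2x2 object in the top-left corner
# on a blue background, with slight color variation inside the object.
RED, RED2, BLUE = (200, 10, 10), (205, 12, 8), (10, 10, 200)
demo_image = [
    [RED, RED2, BLUE, BLUE],
    [RED2, RED, BLUE, BLUE],
    [BLUE, BLUE, BLUE, BLUE],
    [BLUE, BLUE, BLUE, BLUE],
]
print(sorted(grow_uniform_region(demo_image, (0, 0))))
# → [(0, 0), (0, 1), (1, 0), (1, 1)]
```

Comparing every pixel against the seed color, rather than against its neighbour, keeps the region from drifting across a gradual color gradient into the background.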

Touch is detected using an original method that records the distance to each object during an initial configuration stage, and sounds are produced using the Pyglet multimedia library and downloaded music samples.
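The two stages can be sketched roughly as follows. This is not the project's actual method: it assumes that some scalar distance estimate for the hand is available per frame, and that a touch means the fingertip lies inside the object's pixel region while the current distance is close to the one recorded at configuration time. The names `NOTE_ORDER`, `CalibratedObject`, `calibrate`, `is_touching`, and `rel_tolerance` are all hypothetical.

```python
from dataclasses import dataclass

# Hypothetical predefined note order (a C major scale).
NOTE_ORDER = ["C4", "D4", "E4", "F4", "G4", "A4", "B4", "C5"]


@dataclass
class CalibratedObject:
    note: str               # note assigned during configuration
    region: set             # (row, col) pixels belonging to the object
    touch_distance: float   # hand distance recorded when first touched


def calibrate(samples):
    """Configuration stage: `samples` is a list of (region, distance) pairs
    in the order the user touched the objects. Notes are assigned in
    NOTE_ORDER, wrapping around if there are more objects than notes."""
    return [
        CalibratedObject(NOTE_ORDER[i % len(NOTE_ORDER)], region, distance)
        for i, (region, distance) in enumerate(samples)
    ]


def is_touching(obj, fingertip, distance, rel_tolerance=0.15):
    """Play stage: report a touch when the fingertip is inside the object's
    region and the current distance is within `rel_tolerance` (relative)
    of the distance recorded during configuration."""
    in_region = fingertip in obj.region
    close_enough = abs(distance - obj.touch_distance) <= rel_tolerance * obj.touch_distance
    return in_region and close_enough


objects = calibrate([
    ({(0, 0), (0, 1)}, 0.50),  # first object touched
    ({(5, 5), (5, 6)}, 0.80),  # second object touched
])
for obj in objects:
    if is_touching(obj, fingertip=(0, 1), distance=0.52):
        # In a real program, a sample for obj.note would be played here,
        # for instance with Pyglet.
        print("play", obj.note)
```

When a touch is reported, the matching sample could be played with Pyglet, for example by loading it once with `pyglet.media.load(path, streaming=False)` and calling `.play()` on the result; the sample paths are left out here because the source does not specify them.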

The project is a proof of concept on which many improvements and new features can be built to create interesting and innovative tools for use in the comfort of your own room.