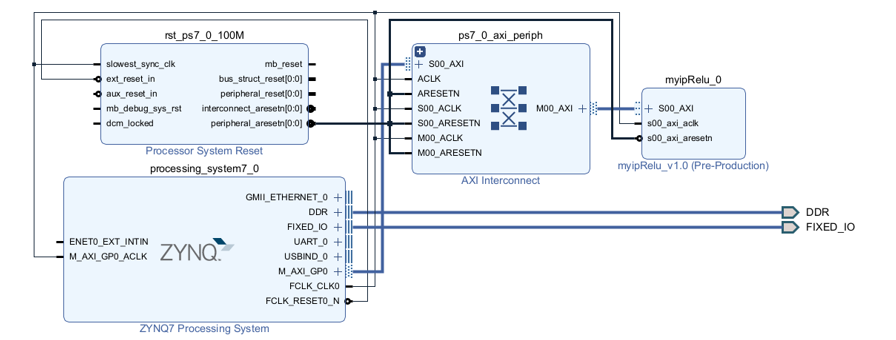

This project investigates two common activation functions, ReLU and GeLU, and examines the advantages and disadvantages of each. As part of the project, the ReLU function was implemented on the PYNQ Z2 board as a custom overlay created in the Vivado environment.
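To make the comparison concrete, the sketch below writes out both functions and their derivatives. It uses the standard definitions: ReLU(x) = max(0, x) and the exact GeLU(x) = x·Φ(x), where Φ is the standard normal CDF. The function names are illustrative and are not taken from the project's code.

```python
import math


def relu(x: float) -> float:
    """ReLU: passes positive inputs unchanged and zeroes out the rest."""
    return max(0.0, x)


def relu_grad(x: float) -> float:
    """Derivative of ReLU (taken as 0 at x == 0 by convention)."""
    return 1.0 if x > 0 else 0.0


def gelu(x: float) -> float:
    """Exact GeLU: x * Phi(x), with Phi the standard normal CDF."""
    return 0.5 * x * (1.0 + math.erf(x / math.sqrt(2.0)))


def gelu_grad(x: float) -> float:
    """Derivative of GeLU: Phi(x) + x * phi(x), with phi the standard normal PDF."""
    cdf = 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))
    pdf = math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)
    return cdf + x * pdf


if __name__ == "__main__":
    for x in (-2.0, -0.5, 0.0, 0.5, 2.0):
        print(f"x={x:+.1f}  relu={relu(x):.4f}  relu'={relu_grad(x):.1f}  "
              f"gelu={gelu(x):+.4f}  gelu'={gelu_grad(x):+.4f}")
```

The printout illustrates the trade-off. ReLU needs only a single comparison, which makes it cheap and easy to map to hardware, but its gradient is exactly zero for every negative input, so a unit whose inputs stay negative stops learning. GeLU is smooth and keeps a small non-zero gradient for slightly negative inputs, at the cost of evaluating `erf` (or an approximation of it).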

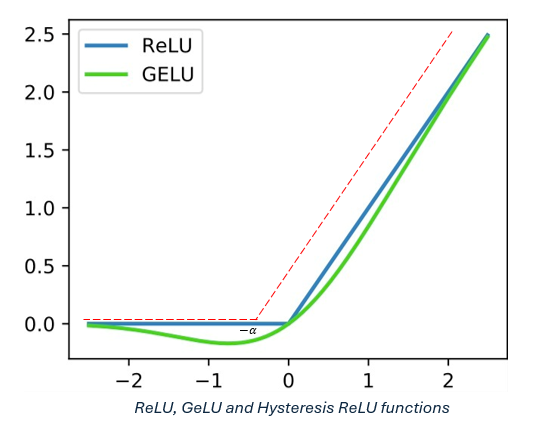

During the project, an in-depth understanding of neural networks, their structure, and their applications was developed. Common activation functions such as ReLU and GeLU were explored, and a new function, Hysteresis ReLU, was introduced. Hysteresis ReLU aims to combine the strengths of existing activation functions while mitigating their weaknesses. In addition, hands-on experience was gained in creating and using overlays for training neural networks on the PYNQ Z2 board, which provides a platform for running neural networks on hardware.
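This section does not define Hysteresis ReLU, so the sketch below is only an illustration under an assumed formulation, not the project's definition. It assumes the "hysteresis" lies between the forward and backward passes: the forward pass is plain ReLU (switching at 0), while the backward pass lets the gradient through for any input above a small negative threshold `-alpha`. The parameter `alpha` and both function names are hypothetical.

```python
def hysteresis_relu_forward(x: float) -> float:
    """Forward pass (assumed): identical to standard ReLU, switching at 0."""
    return max(0.0, x)


def hysteresis_relu_grad(x: float, alpha: float = 0.1) -> float:
    """Backward pass (assumed): pass the gradient whenever x > -alpha.

    'alpha' is a hypothetical hysteresis width chosen for illustration.
    With alpha == 0 this reduces to the ordinary ReLU derivative.
    """
    if alpha < 0:
        raise ValueError("alpha must be non-negative")
    return 1.0 if x > -alpha else 0.0


if __name__ == "__main__":
    for x in (-0.5, -0.05, 0.0, 0.3):
        print(f"x={x:+.2f}  forward={hysteresis_relu_forward(x):.2f}  "
              f"relu'={hysteresis_relu_grad(x, alpha=0.0):.0f}  "
              f"hysteresis'={hysteresis_relu_grad(x):.0f}")
```

Under this assumption, inference costs exactly what ReLU costs (one comparison), while during training inputs just below zero, such as `-0.05`, still receive a gradient instead of being cut off, which is one way to trade off the properties of ReLU and GeLU discussed above.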

In the second part of the project, experiments were conducted with the FINN compiler, a research tool from Xilinx. FINN provides a path for implementing and deploying neural networks on Xilinx boards, accelerating them by generating custom dataflow architectures tailored to each network. The compiler also applies optimizations that can improve network performance, and working with these optimizations highlighted how important hardware-oriented optimization is for running neural networks efficiently.
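One example of such a hardware-oriented optimization, as we understand FINN's design, is that quantized activations are lowered to multi-threshold units: instead of scaling, rounding, and clipping, the hardware simply counts how many precomputed thresholds an input reaches. The NumPy sketch below shows that a uniform unsigned quantized ReLU and its threshold form compute the same integers. It is a conceptual illustration only and does not use FINN's API; `quant_relu`, `threshold_relu`, `scale`, and `bits` are hypothetical names.

```python
import numpy as np


def quant_relu(x: np.ndarray, scale: float, bits: int) -> np.ndarray:
    """Unsigned quantized ReLU: round half up, then clip to [0, 2**bits - 1]."""
    levels = 2 ** bits - 1
    return np.clip(np.floor(x / scale + 0.5), 0, levels).astype(np.int64)


def threshold_relu(x: np.ndarray, scale: float, bits: int) -> np.ndarray:
    """Same function as a multi-threshold unit: count the thresholds x reaches."""
    levels = 2 ** bits - 1
    # floor(x/scale + 0.5) >= k  <=>  x >= scale * (k - 0.5), for k = 1..levels
    thresholds = scale * (np.arange(1, levels + 1) - 0.5)
    # Compare every input against every threshold, then count hits per input.
    return (x[..., None] >= thresholds).sum(axis=-1).astype(np.int64)


if __name__ == "__main__":
    x = np.array([-1.0, 0.0, 0.04, 0.06, 0.2, 0.3, 2.0])
    print(quant_relu(x, scale=0.1, bits=2))
    print(threshold_relu(x, scale=0.1, bits=2))
```

The threshold form needs only comparators and a counter per output, with the scale factor folded into the constants, which is why it suits an FPGA dataflow pipeline better than a datapath with division and rounding logic.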